The main object, MuBu (for "multi-buffer"), is a multi-track container for sound descriptions and motion capture data. The imubu object is an editor and visualizer of MuBu content. A set of externals for content-based, real-time interactive audio processing can access the shared memory of the MuBu container.

MuBu was initiated by Norbert Schnell in 2008 to provide Max with a powerful multi-buffer: a container with multiple synchronized tracks such as audio, sound descriptors, segmentation markers, tags, music scores, and sensor data (see the seminal ICMC article “MUBU & friends”). MuBu was motivated by the need to give users generic time-based data structures in Max, with optimised access to shared memory and lock-free real-time signal processing objects that can operate on them. From the start, the MuBu library has also included a powerful editing and visualization tool: the imubu object, developed by Riccardo Borghesi.

This project benefited from experience gained in several earlier projects, such as FTM. Since then, IRCAM’s ISMM team has expanded the MuBu library, continually adding new functionality and improving the documentation.

Beyond the features it originally brought to the Max environment, MuBu opens new ways of thinking about musical interaction. MuBu is the engine behind all the patches of the Modular Musical Objects, winner of the 2011 Guthman Prize. In fact, MuBu is our Max playground, where our recent research on movement-sound interaction is implemented. For example, MuBu includes not only the gesture follower (gf) but also XMM, our latest set of interactive machine learning objects by Jules Françoise: a powerful series of externals for static or dynamic gesture recognition.

Descriptor-based sound synthesis (granular or concatenative) is also included: it is now easy to implement CataRT, by Diemo Schwarz and colleagues, using just three MuBu externals.

Real-time and offline data processing is implemented with PiPo (see the ISMIR 2017 publication), covering audio descriptors (mfcc, yin, …), wavelets, filters, and more.

The set of Max/MSP externals is available from the Max Package Manager (stable versions) and from the IRCAM Forum (newest and beta versions). Please visit the MuBu Forum page and discussion group.

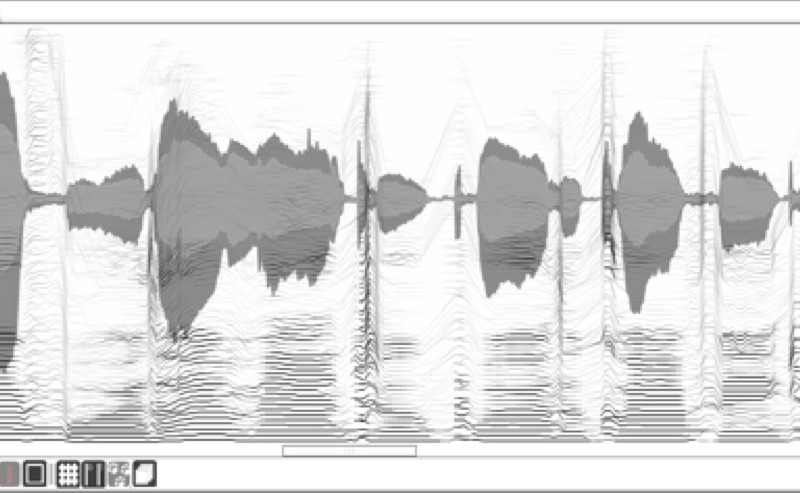

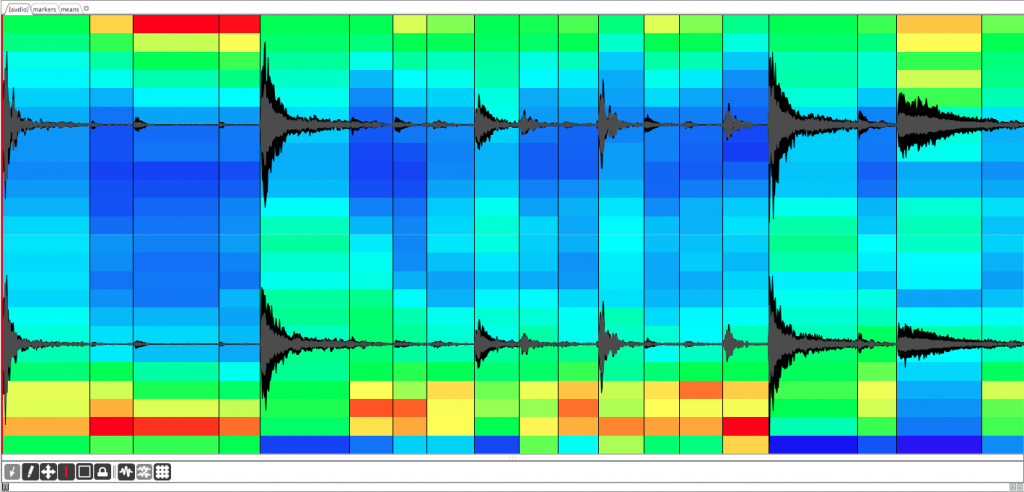

The MuBu graphical module (imubu) displaying a drum loop waveform and Bark coefficients averaged over a segmentation imported from AudioSculpt using SDIF