
Dates: 17 – 21 July 2018

Deadline for applications: 31 May 2018

CataRT workshop for Composers, Improvisers, Sound Artists, Performers, Instrumentalists, Media Artists.

Learn how to build your own instrument, installation, composition environment, or sound design tool based on navigating through large masses of sound.


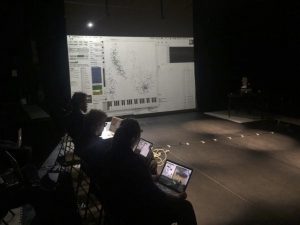

We presented the first version of COMO (Collective Movement) at the International Conference on Movement and Computing, Goldsmiths, University of London, 2017.

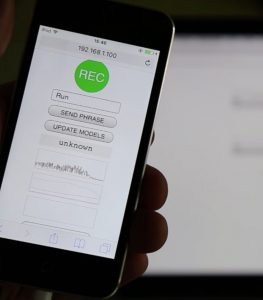

COMO is a collection of prototype Web Apps for movement-based sound interaction, targeting collective interaction using mobile phones.

COMO is based on a software ecosystem developed by IRCAM, using XMM (see in particular xmm-soundworks-template), Collective Soundworks, and waves.js, and integrating the RAPID-MIX API.

The idea behind the implementation of COMO is to have a distributed interactive machine learning architecture. It consists of lightweight client-side JavaScript libraries that enable recording gestures and performing analysis/recognition, and of a server performing the training and storage of statistical models.
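To make the division of labor concrete, here is a minimal sketch in Python (COMO's real clients are JavaScript). The class and method names are hypothetical, the "network" is a direct method call, and a nearest-centroid classifier stands in for the XMM statistical models; the sketch only shows who records, who trains, and who recognizes.

```python
import math
from dataclasses import dataclass, field

# Hypothetical sketch of a COMO-style client/server split; not COMO's actual API.
Frame = list[float]  # one sensor frame, e.g. accelerometer x, y, z


@dataclass
class TrainingServer:
    """Stores labeled gesture examples and trains a model on request."""
    examples: dict[str, list[list[Frame]]] = field(default_factory=dict)

    def add_example(self, label: str, gesture: list[Frame]) -> None:
        self.examples.setdefault(label, []).append(gesture)

    def train(self) -> dict[str, Frame]:
        # Stand-in for XMM training: one mean frame (centroid) per label.
        model = {}
        for label, gestures in self.examples.items():
            frames = [frame for gesture in gestures for frame in gesture]
            model[label] = [sum(axis) / len(frames) for axis in zip(*frames)]
        return model


@dataclass
class GestureClient:
    """Lightweight client: records gestures and runs recognition locally."""
    server: TrainingServer
    model: dict[str, Frame] = field(default_factory=dict)

    def record(self, label: str, gesture: list[Frame]) -> None:
        # In a real deployment this would be a network request to the server.
        self.server.add_example(label, gesture)

    def fetch_model(self) -> None:
        self.model = self.server.train()

    def recognize(self, frame: Frame) -> str:
        # Nearest centroid: the label whose mean frame is closest to this frame.
        return min(self.model, key=lambda label: math.dist(frame, self.model[label]))


if __name__ == "__main__":
    server = TrainingServer()
    client = GestureClient(server)
    client.record("shake", [[2.0, 0.1, 0.0], [1.8, -0.2, 0.1]])
    client.record("tilt", [[0.0, 0.9, 0.4], [0.1, 1.0, 0.3]])
    client.fetch_model()
    print(client.recognize([1.9, 0.0, 0.0]))  # shake
```

Keeping recognition on the client means only the compact trained model travels over the network after training, while heavier training and storage stay on the server.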

COLOOP, presented at Futur en Seine, June 8–10, Grande Halle de la Villette

COLOOP is a connected sequencer with integrated speakers. Users can use their smartphones to collectively create musical “loops”.

By NoDesign.net and IRCAM (ISMM team), as part of the CoSiMa project

Presentation of the CoSiMa project, June 2, 2017, IRCAM, Manifeste Festival

The CoSiMa research project is part of a broad movement that, over the past 10 years, has seen changes in media and in their relationships with users, with the emergence of tangible interfaces, augmented reality, and the web environment. Its aim is to explore these new relationships through new interfaces and collaborative creation tools based on the latest web and mobile technologies.

The CoSiMa project focused on producing open tools and an authoring platform for experimenting with a wide range of applications. Its applications include audiovisual installations, group performances, innovative participative experiences, interactive fictions in the framework of artistic and educational projects, innovative public services, special events, and communication.

Productions from the CoSiMa project (2013-2017): Conference and collective interactive experiences.

ANR project coordinated by IRCAM (UMR STMS Ircam / CNRS / Université Pierre et Marie Curie)

Partners: EnsadLab, ESBA TALM, ID Scenes, No Design, Orbe

Square is a sound walk for the general public, using headphones. Connected to a website and using geolocation, each participant dives into an electronic, spatialized universe. It combines the real (a “guided tour” of Place Stravinsky) with an electronic fiction heard through headphones that alters the space, inspired by recordings made in the same location at a different time. A contemplative and cinematographic experience, Square is the result of Lorenzo Bianchi’s artistic research with the Interaction Sound Music Movement team.

For an ensemble of mobile devices, an interactive system, and a binaural holophonic diffusion system for headphones

Lorenzo Bianchi Hoesch, Square (premiere, 2017)

As part of the artistic research residency on interactive, social and participatory installations (Proxemic Fields project)

IRCAM scientific advisors: David Poirier-Quinot, Olivier Warusfel (Acoustic and Cognitive Spaces Team, IRCAM-STMS), Norbert Schnell, Benjamin Matuszewski (Interaction Sound Music Movement Team, IRCAM-STMS)

Le son au bout des doigts is an installation shown June 1–18 at the Centre Pompidou as part of Ircam’s Manifeste festival, using ISMM’s gesture recognition and interactive sound synthesis technology.


It offers a playful, interactive sound and visual journey for children aged two and up. Through handling objects and listening, the children’s sight and hearing are engaged. In Topo-phonie Café, imagined by B. MacFarlane, the children are guided by a game of organic structures: they set special tables that create sound surprises.

With DIRTI, developed by User Studio/Matthieu Savary, children sink their hands into different materials filling interactive tubs, setting off sounds and images.

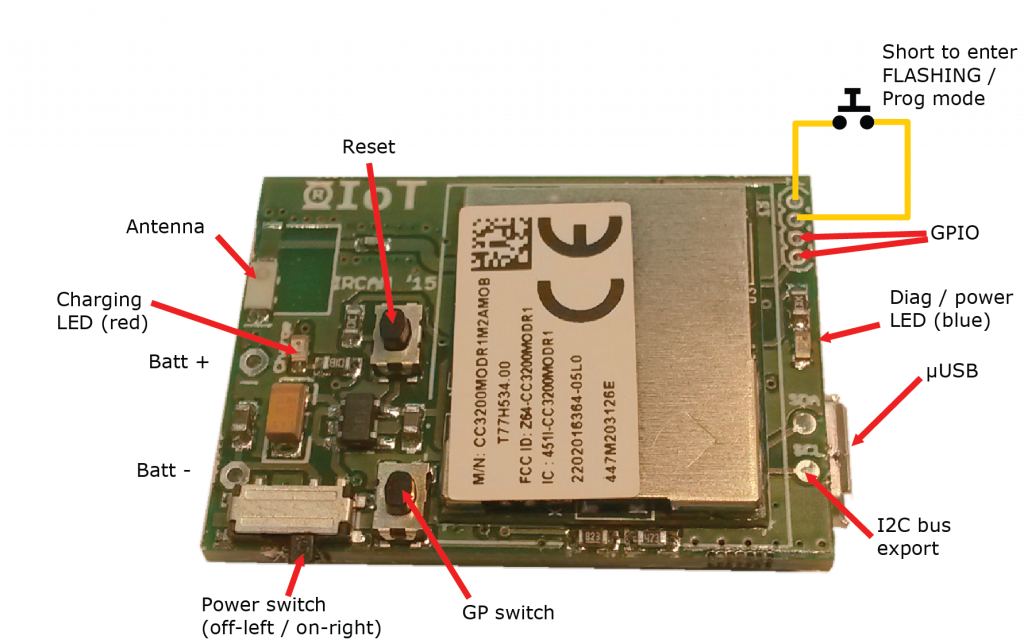

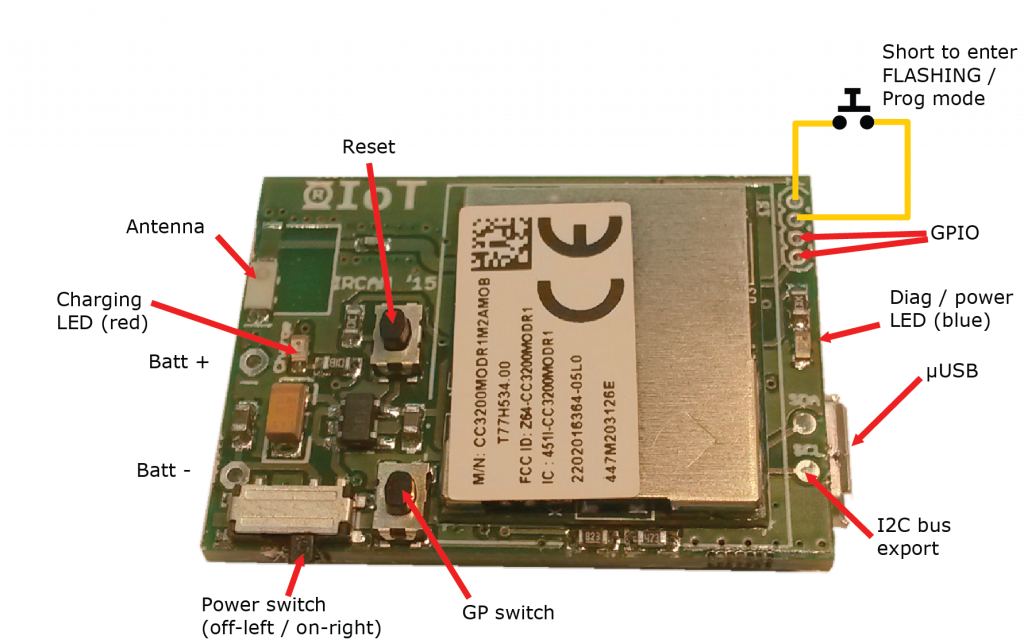

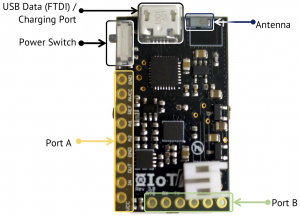

The R-IoT sensor module embeds an STMicroelectronics 9-axis sensor with 3 accelerometers, 3 gyroscopes and 3 magnetometers, all 16-bit. The data are sent wirelessly over WiFi using the OSC (Open Sound Control) protocol.

The core of the board is a Texas Instruments WiFi module with a 32-bit ARM Cortex processor that executes the program and handles the Ethernet/WAN stack. It is compatible with TI’s Code Composer and with Energia, a port of the Arduino environment for TI processors.

Processing modules can be found here.

We also provide firmware and processing modules to be uploaded to the R-IoT, as well as Max patches (to use with the free MuBu library) that bring the data stream directly into Max to analyze it or detect specific motion patterns (free fall, spinning, shaking…).

The code and Max patches can be found on GitHub: https://github.com/Ircam-RnD/RIoT.
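The motion-pattern detection mentioned above is done in Max with MuBu. Purely as an illustration of one common approach, the Python sketch below detects free fall and shaking from the accelerometer magnitude. It assumes frames already scaled to units of g, and the thresholds are illustrative values, not those used in the R-IoT patches.

```python
import math

# Illustrative thresholds, not values taken from the R-IoT Max patches.
Frame = tuple[float, float, float]  # accelerometer x, y, z in units of g (assumed)


def magnitude(frame: Frame) -> float:
    return math.sqrt(sum(a * a for a in frame))


def detect_free_fall(frames, threshold_g=0.3, min_samples=5) -> bool:
    """True if |a| stays below threshold_g for min_samples consecutive frames.

    At rest an accelerometer reads about 1 g (gravity); in free fall it reads near 0 g.
    """
    run = 0
    for frame in frames:
        run = run + 1 if magnitude(frame) < threshold_g else 0
        if run >= min_samples:
            return True
    return False


def detect_shaking(frames, threshold_g=2.0, min_peaks=3) -> bool:
    """Crude shake detector: True if at least min_peaks frames exceed threshold_g."""
    return sum(magnitude(frame) > threshold_g for frame in frames) >= min_peaks


if __name__ == "__main__":
    at_rest = [(0.0, 0.0, 1.0)] * 10
    falling = at_rest[:3] + [(0.02, 0.01, 0.05)] * 6 + at_rest[:3]
    shaken = [(2.5, 0.0, 1.0), (-2.4, 0.0, 1.0), (2.6, 0.1, 1.0)]
    print(detect_free_fall(at_rest), detect_free_fall(falling))  # False True
    print(detect_shaking(at_rest), detect_shaking(shaken))       # False True
```

Requiring several consecutive low-magnitude samples avoids triggering on a single noisy reading; the right count depends on the sample period configured on the module.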

Energia version 16 should be used to compile and flash the current firmware.

A guide for programming and flashing the R-IoT module is available here: R-IoT Programming & Flashing Guide.

Specs:

How to configure a WiFi access point to receive data from the R-IoT

While it’s possible to connect the computer to the AP over WiFi, we strongly advise using wired Ethernet, as it maximizes throughput and reduces both latency and data jitter.

When taken straight out of the box, the AP is usually configured with DHCP enabled for clients and with a fixed IP address, most of the time 192.168.1.1 or 192.168.0.1, or 192.168.0.254 in the case of the mini TP-Link 3G/WiFi router.

Set your computer to DHCP (aka “obtain an IP address automatically”) and connect it to the router by wired Ethernet. Depending on the address you receive (192.168.1.xxx or 192.168.0.xxx), open the admin page at 192.168.1.1 or 192.168.0.1, or… RTFM ;-) Sometimes DHCP is disabled by default, in which case a static IP address must be assigned to the computer in the same subnet as the router’s default address. For the small TP-Link pocket router above, the computer can be set to 192.168.0.100 to first access the admin page at 192.168.0.254.

When accessing the AP, you’ll be prompted for a login and password; the provided routers are left at admin / admin. Once in the administration page of the access point, only a few things need to be set up. All of them can be changed and tuned to match any equipment; we’re just covering the basics here.

– The default configuration of the R-IoT is to send data to a computer with the fixed IP address 192.168.1.100. If the router/AP is configured at 192.168.0.1, the addresses will not match, so go to the Network -> LAN page and change the AP address to 192.168.1.1 or 192.168.1.254, which are fairly standard.

This usually requires a reboot of the AP; proceed, then log in again.

– Tune the DHCP settings. Most of the time DHCP will be active on addresses 192.168.1.100 to 192.168.1.199. Change the range to, for example, 192.168.1.110–150: anything that keeps the default destination IP address (192.168.1.100) out of the DHCP lease pool. Alternatively, you can leave it in the DHCP range and reserve that address for your computer’s MAC address (see DHCP address reservation).

– Finally, go to the Wireless settings and change the SSID of your network. Each provided module has a sticker with its serial number, and each number matches an SSID name “riotXX” (module number 5 wants to connect to riot5). If possible, select a WiFi channel different from your neighbors’. Then go to the security section and disable encryption (WPA2 encryption is supported but not recommended on first use).

Once the router is configured with this 192.168.1.xxx address scheme and DHCP, the computer can be set to 192.168.1.100, the default destination address the R-IoT module sends data to.
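The three settings above can be sanity-checked before rebooting the AP. The Python helper below is hypothetical, not an IRCAM tool: it assumes a 255.255.255.0 netmask, the 192.168.1.100 default destination, and the riotN naming used for the provided modules (any SSID works as long as module and AP agree).

```python
import ipaddress

# Default destination of the R-IoT, as described in the text.
RIOT_DEST_IP = ipaddress.ip_address("192.168.1.100")


def check_ap_config(ap_ip, dhcp_start, dhcp_end, ssid, module_number,
                    netmask="255.255.255.0"):
    """Return a list of problems with a proposed AP configuration (empty if OK).

    Hypothetical helper; the /24 netmask and the riotN naming are assumptions.
    """
    problems = []
    lan = ipaddress.ip_network(f"{ap_ip}/{netmask}", strict=False)
    if RIOT_DEST_IP not in lan:
        problems.append(f"{RIOT_DEST_IP} is outside the AP subnet {lan}")
    start = ipaddress.ip_address(dhcp_start)
    end = ipaddress.ip_address(dhcp_end)
    if start <= RIOT_DEST_IP <= end:
        problems.append(f"{RIOT_DEST_IP} is inside the DHCP pool {start}-{end}")
    if ssid != f"riot{module_number}":
        problems.append(f"SSID {ssid!r} does not match module #{module_number}")
    return problems


if __name__ == "__main__":
    # Factory defaults of a router at 192.168.0.1: wrong subnet and wrong SSID.
    print(check_ap_config("192.168.0.1", "192.168.0.100", "192.168.0.199",
                          "TP-LINK_1234", 5))
    # Reconfigured as described above: no problems.
    print(check_ap_config("192.168.1.254", "192.168.1.110", "192.168.1.150",
                          "riot5", 5))  # []
```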

For #MFT scandi, we pre-configured all the provided pocket routers. They can already be accessed at 192.168.1.254 and have an SSID (WiFi network name) matching the number of the provided R-IoT module (riot3 for module #3, etc.). In general, there is no standard for naming your SSID; the only requirement is that the SSID configured in the R-IoT matches the AP’s SSID.

The WiFi radio channel can be adjusted to avoid channels already crowded by other networks. Each router has been assigned a different channel (double-check this). Use a scanner or any WiFi survey app to diagnose the RF environment around your spot and select the least busy channel.
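A rough way to turn a survey into a channel choice: count how many neighboring networks overlap each of the commonly used non-overlapping 2.4 GHz channels (1, 6, 11) and take the least loaded one. This sketch assumes scan results copied by hand as (SSID, channel) pairs and the rule of thumb that 2.4 GHz channels fewer than 5 apart overlap; it ignores signal strength, which a survey app would also show.

```python
from collections import Counter

# Commonly used non-overlapping 2.4 GHz channels.
CANDIDATES = (1, 6, 11)


def least_busy_channel(scan, candidates=CANDIDATES):
    """Pick the candidate channel overlapped by the fewest neighboring networks.

    scan: iterable of (ssid, channel) pairs, e.g. copied from a WiFi survey app.
    Rule of thumb (assumption): 2.4 GHz channels fewer than 5 apart overlap.
    Ties go to the earliest candidate.
    """
    load = Counter()
    for _ssid, channel in scan:
        for candidate in candidates:
            if abs(candidate - channel) < 5:
                load[candidate] += 1
    return min(candidates, key=lambda c: load[c])


if __name__ == "__main__":
    survey = [("home", 1), ("cafe", 1), ("office", 6), ("lab", 3)]
    print(least_busy_channel(survey))  # 11
```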

Accessing the Web Server for configuring the module

In the current firmware version, the module’s own IP address is always obtained via DHCP (until Energia stabilizes its API), and security is not implemented yet either. The main parameters that can be adjusted are the IP address of the computer to send data to (DEST IP), the UDP port, the module ID, and the sample rate, set as a sampling period (minimum 3 ms).
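Because the sample rate is entered as a period in milliseconds, the 3 ms minimum corresponds to at most 1000 / 3 ≈ 333 samples per second. The hypothetical RiotSettings class below mirrors the parameters listed above and validates them; the field names are illustrative rather than the firmware’s own, and no default UDP port is assumed.

```python
import ipaddress
from dataclasses import dataclass

MIN_SAMPLE_PERIOD_MS = 3  # minimum stated for the current firmware


@dataclass
class RiotSettings:
    """Hypothetical mirror of the module's web-page parameters (names are illustrative)."""
    udp_port: int
    module_id: int
    sample_period_ms: float
    dest_ip: str = "192.168.1.100"  # default destination address

    def __post_init__(self) -> None:
        ipaddress.ip_address(self.dest_ip)  # raises ValueError if malformed
        if not 1 <= self.udp_port <= 65535:
            raise ValueError("UDP port must be between 1 and 65535")
        if self.sample_period_ms < MIN_SAMPLE_PERIOD_MS:
            raise ValueError(f"sample period must be at least {MIN_SAMPLE_PERIOD_MS} ms")

    @property
    def sample_rate_hz(self) -> float:
        # A period of T milliseconds gives 1000 / T samples per second.
        return 1000.0 / self.sample_period_ms


if __name__ == "__main__":
    # Port 9000 is an arbitrary example value.
    settings = RiotSettings(udp_port=9000, module_id=5, sample_period_ms=3)
    print(round(settings.sample_rate_hz, 1))  # 333.3
```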

The ID is used to compose the Open Sound Control (OSC) address, which follows the scheme /<ID>/<stream> and is followed by a list of data values. When using several modules on the same WiFi access point, the best practice is to:

Once the module configuration is finished, press the Submit button: the settings are saved in the module’s non-volatile memory, and the module must then be rebooted to apply the new configuration.

Receiving sensor data from the module

Below is an example of receiving the data in Max/MSP. The module currently forges 3 OSC packets.

Original R-IoT

The R-IoT module allows for sensing movement, processing and wireless transmission through WiFi. It is developed as an essential brick towards creating novel motion-based musical instruments and body-based media interaction.


Bitalino R-IoT

A commercial version is available from Bitalino.

More information:

The R-IoT was initially developed by the ISMM and PiP teams at IRCAM-Centre Pompidou (STMS Lab IRCAM-CNRS-UPMC) within the CoSiMa project (funded by the ANR) and the MusicBricks and RAPID-MIX projects, funded by the European Union’s Horizon 2020 research and innovation programme.

The Bitalino R-IoT was developed as a collaboration between IRCAM and PluX, with support from the European Union’s Horizon 2020 research and innovation programme under grant agreement N°644862 RAPID-MIX.

On February 6th, 7th and 8th, La Ville de Paris and the À Suivre association organized the 4th edition of Paris Face Cachée, which aims to offer original, off-the-wall ways to discover the city. The CoSiMa team led the Expérimentations sonores (sound experiments) workshops held at IRCAM.

This workshop/symposium marks the end of the Legos project, which focused on sensorimotor learning in gesture-sound interactive systems. Its goal is to report the major results, show demonstrations, and discuss current trends and emerging applications (such as rehabilitation, music, sport, and wellbeing) with invited international researchers.

The event is free, but reservation is required: please email bevilacqua at ircam dot fr.