Conference // Events // News // Software

Dates: 17 – 21 July 2018

Deadline for applications: 31 May 2018

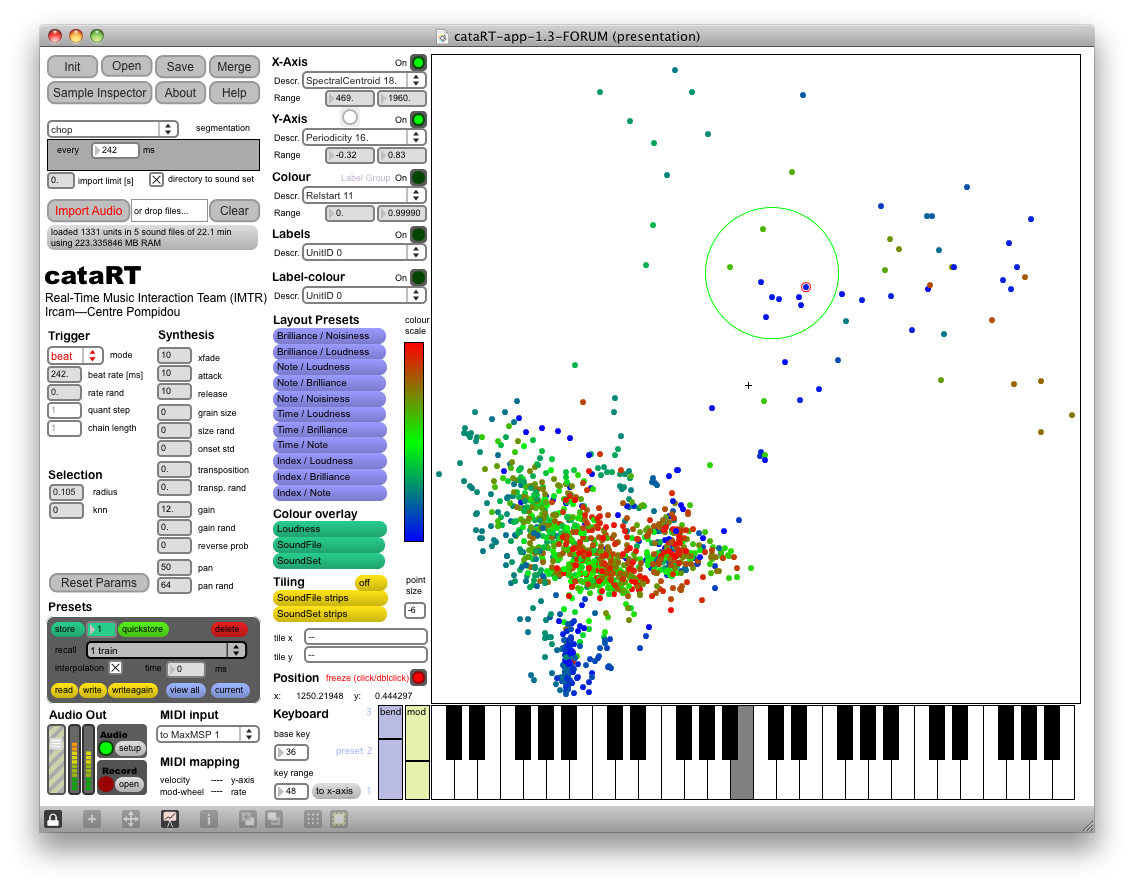

CataRT workshop for Composers, Improvisers, Sound Artists, Performers, Instrumentalists, Media Artists.

Learn how to build your own instrument, installation, composition environment, or sound design tool based on navigating through large masses of sound.

Conference // Events // News // Project // Software

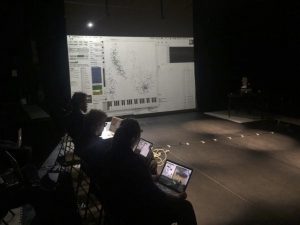

We presented the first version of COMO – Collective Movement at the International Conference on Movement and Computing, Goldsmiths, University of London, 2017.

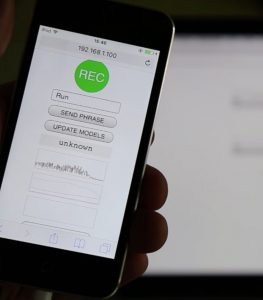

COMO is a collection of prototype Web Apps for movement-based sound interaction, targeting collective interaction using mobile phones.

COMO is based on a software ecosystem developed by IRCAM, using XMM (see in particular xmm-soundworks-template, Collective Soundworks, and waves.js) and integrating the RAPID-MIX API.

The idea behind the implementation of COMO is to have a distributed interactive machine learning architecture. It consists of lightweight client-side JavaScript libraries that enable recording gestures and performing analysis/recognition, and of a server performing the training and storage of statistical models.
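To make the client/server split described above concrete, here is a minimal sketch in plain JavaScript. It is not COMO's code and does not use the XMM or RAPID-MIX APIs: every name in it (`ModelServer`, `extractFeatures`, `recognize`) is hypothetical, the "network" is simulated by a JSON round trip, and a simple nearest-centroid classifier stands in for the statistical models that the real system trains on the server.

```javascript
// Hypothetical sketch only: these names are NOT part of COMO, XMM, or RAPID-MIX.

// --- Server side: stores labelled examples, trains and stores the model ---
class ModelServer {
  constructor() {
    this.examples = new Map(); // label -> array of feature vectors
    this.model = null;         // last trained model, kept on the server
  }

  // Receive one labelled feature vector recorded by a client.
  addExample(label, features) {
    if (!this.examples.has(label)) this.examples.set(label, []);
    this.examples.get(label).push(features);
  }

  // Assumption: a nearest-centroid model replaces the real statistical models.
  train() {
    const centroids = {};
    for (const [label, vectors] of this.examples) {
      const sum = new Array(vectors[0].length).fill(0);
      for (const v of vectors) v.forEach((x, i) => { sum[i] += x; });
      centroids[label] = sum.map((s) => s / vectors.length);
    }
    this.model = { centroids };
    // The JSON round trip simulates sending the model to a client.
    return JSON.parse(JSON.stringify(this.model));
  }
}

// --- Client side: lightweight recording, analysis, and recognition ---

// Reduce a recorded gesture (array of [x, y, z] frames) to its per-axis mean.
function extractFeatures(frames) {
  const sum = [0, 0, 0];
  for (const f of frames) f.forEach((x, i) => { sum[i] += x; });
  return sum.map((s) => s / frames.length);
}

// Return the label whose centroid is closest (squared Euclidean distance).
function recognize(model, features) {
  let best = null;
  let bestDist = Infinity;
  for (const [label, c] of Object.entries(model.centroids)) {
    const d = c.reduce((acc, ci, i) => acc + (ci - features[i]) ** 2, 0);
    if (d < bestDist) { bestDist = d; best = label; }
  }
  return best;
}

// Usage: clients send examples, the server trains, a client recognizes locally.
const demoServer = new ModelServer();
demoServer.addExample("shake", extractFeatures([[1, 0, 0], [0.8, 0.1, 0]]));
demoServer.addExample("tilt", extractFeatures([[0, 0, 1], [0, 0.1, 0.9]]));
const demoModel = demoServer.train();
console.log(recognize(demoModel, extractFeatures([[0.9, 0, 0.1]]))); // "shake"
```

The point of the split is that training data and models live in one place, while each phone only needs enough code to record, extract features, and evaluate a model it has received.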

Update: the PiPo SDK v0.2 is now available on Ircam R&D's GitHub!

The PiPo module API for writing your own processing and analysis objects is now available and documented here: http://recherche.ircam.fr/equipes/temps-reel/mubu/pipo

It includes an example Xcode project to build a simple PiPo mxo for Max that also works within MuBu.

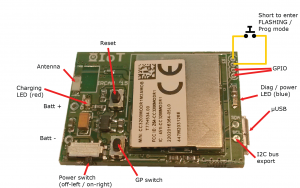

At #MTFScandi, we are presenting our new Wi-Fi R-IoT sensor module, which has a 9-axis sensor (3 accelerometers, 3 gyroscopes, and 3 magnetometers, all 16-bit), along with real-time sensor analysis tools, in the context of the MusicBricks project. More…

MaD allows for simple and intuitive design of continuous sonic gestural interaction. The motion-sound mapping is automatically learned by the system when movement and sound examples are jointly recorded. In particular, our applications focus on using vocal sounds, recorded while performing an action, as primary material for interaction design. The system integrates specific probabilistic models with hybrid sound synthesis models. Importantly, the system is independent of the type of motion/gesture sensing device and can directly accommodate different sensors such as cameras, contact microphones, and inertial measurement units. Applications include the performing arts and gaming, as well as medical uses such as auditory-aided rehabilitation. More…

We developed a new object for using the Leap Motion in Max, based on the Leap Motion SDK V2 Skeletal Tracking Beta. More…

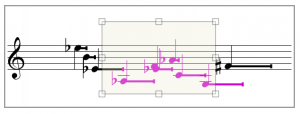

The piece Five Out of Six by Christopher Trapani, for small ensemble and electronics, won the ICMC 2014 Best Piece Award (Americas).

The piece makes extensive use of CataRT to play precise microtonal chords with lively timbres. More…

We just released the beta version of mubu.*mm, a set of objects for probabilistic modeling of motion and sound relationships. More…