
Dates: 17 – 21 July 2018

Deadline for applications: 31 May 2018

CataRT workshop for Composers, Improvisers, Sound Artists, Performers, Instrumentalists, Media Artists.

Learn how to build your own instrument, installation, composition environment, or sound design tool based on navigating through large masses of sound.

Update: the PiPo SDK v0.2 is now available on Ircam R&D's GitHub!

The PiPo module API for writing your own processing and analysis objects is now available and documented here: http://recherche.ircam.fr/equipes/temps-reel/mubu/pipo

It includes an example Xcode project to build a simple PiPo mxo for Max that also works within MuBu.

MaD allows for simple and intuitive design of continuous sonic gestural interaction. The motion-sound mapping is automatically learned by the system when movement and sound examples are jointly recorded. In particular, our applications focus on using vocal sounds, recorded while performing the action, as primary material for interaction design. The system integrates specific probabilistic models with hybrid sound synthesis models. Importantly, the system is independent of the type of motion/gesture sensing device, and can directly accommodate different sensors such as cameras, contact microphones, and inertial measurement units. Applications include the performing arts and gaming, as well as medical uses such as auditory-aided rehabilitation.
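As a rough illustration of learning a mapping from jointly recorded examples, here is a minimal sketch. It is not MaD's model: it swaps in a single joint Gaussian over one motion feature and one sound parameter, and predicts the sound parameter as the conditional mean given a new motion value. The function names and the example data are hypothetical.

```python
from statistics import fmean


def fit_joint_gaussian(motion, sound):
    """Estimate the joint-Gaussian terms needed to condition sound on motion.

    `motion` and `sound` are equal-length lists of paired frames
    (hypothetical 1-D features recorded at the same time).
    """
    if len(motion) != len(sound) or len(motion) < 2:
        raise ValueError("need at least two paired frames")
    mu_m, mu_s = fmean(motion), fmean(sound)
    var_m = fmean([(m - mu_m) ** 2 for m in motion])
    cov_ms = fmean([(m - mu_m) * (s - mu_s) for m, s in zip(motion, sound)])
    if var_m == 0:
        raise ValueError("motion examples must vary")
    return mu_m, mu_s, var_m, cov_ms


def predict_sound(model, m):
    """Conditional mean E[sound | motion = m] under the fitted Gaussian."""
    mu_m, mu_s, var_m, cov_ms = model
    return mu_s + cov_ms / var_m * (m - mu_m)


# Jointly recorded demonstration: hand height vs. filter cutoff in Hz (made up).
motion_examples = [0.0, 1.0, 2.0, 3.0]
sound_examples = [100.0, 200.0, 300.0, 400.0]
model = fit_joint_gaussian(motion_examples, sound_examples)
cutoff = predict_sound(model, 1.5)  # sound parameter for a new motion value
```

In performance, `predict_sound` would be called for every incoming sensor frame. A single Gaussian only captures a linear relationship, which is why richer probabilistic models are used in practice.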

Gestural sonic interaction: playing with a virtual water tub. Sound samples are controlled with hand movements, by splashing water or sweeping under the surface.

Conception: Eric O. Boyer (Ircam & LPP), Sylvain Hanneton (LPP), Frédéric Bevilacqua (Ircam)

Technology: MuBu and LeapMotion Max object, ISMM team at Ircam-STMS CNRS UPMC

Sound materials: Roland Cahen & Diemo Schwarz – Topophonie project

With the support of ANR LEGOS project.


We developed a new object for using the Leap Motion in Max, based on the Leap Motion SDK V2 Skeletal Tracking Beta.

We just released the beta version of mubu.*mm, a set of objects for probabilistic modeling of motion and sound relationships.

Including:

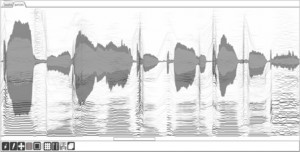

The main MuBu object (for multi-buffer) is a multi-track container for sound description and motion capture data. The imubu object is an editor and visualizer of the MuBu content. A set of externals for content-based real-time interactive audio processing can access the shared memory of the MuBu container.
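The idea of a multi-track container shared by several processing objects can be sketched in a few lines. This is only a conceptual illustration in Python, not the MuBu API: the class names `Track`, `Container`, and `Reader`, and all their methods, are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Track:
    """One time-aligned stream (audio descriptors, motion capture, ...)."""
    name: str
    frame_rate: float  # frames per second
    frames: list = field(default_factory=list)

    def frame_at(self, time_s):
        """Return the frame nearest to time_s, clamped to the track bounds."""
        if not self.frames:
            raise IndexError("track is empty")
        index = round(time_s * self.frame_rate)
        return self.frames[max(0, min(index, len(self.frames) - 1))]


@dataclass
class Container:
    """Holds several tracks, each with its own frame rate."""
    tracks: dict = field(default_factory=dict)

    def add_track(self, name, frame_rate):
        track = Track(name, frame_rate)
        self.tracks[name] = track
        return track


class Reader:
    """Stands in for an external object reading from the shared container."""

    def __init__(self, container, track_name):
        self.container = container  # a reference, not a copy
        self.track_name = track_name

    def read(self, time_s):
        return self.container.tracks[self.track_name].frame_at(time_s)


container = Container()
motion = container.add_track("motion", frame_rate=100.0)
reader = Reader(container, "motion")

# Data recorded after the reader was created is still visible to it,
# because both sides refer to the same container.
motion.frames.extend([[0.0, 0.1], [0.2, 0.3], [0.4, 0.5]])
frame = reader.read(0.02)  # third frame at 100 frames per second
```

Because every reader holds a reference to the same container, an editor, an analysis object, and a synthesis object would all see the same data without copying it.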